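1. What are Claude Skills?

Claude Skills are a mechanism for giving Claude expertise and workflows for a specific domain.

Core concepts

What a skill is: a folder of instructions, scripts, and resources that Claude loads and uses automatically when it needs them.

How it works:

User request → Claude identifies which skill is needed → loads the skill's content → performs the task → returns the result

Key features

| Feature | What it means |
|---|---|
| Progressive disclosure | Content is loaded only when needed, so skills that are not in use take up little context |
| Composability | Multiple skills can work together automatically |
| Portability | The same skill works in the web app, the API, and Claude Code |
| Executability | A skill can include executable code, such as Python or JavaScript |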

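Use cases

✅ Brand guidelines → automatically apply the company's brand guidelines to every document

✅ Data analysis pipelines → analyze and visualize data following a specific method

✅ Prompt optimization → refine AI prompts against a standard structure

✅ Document generation → produce professional documents that meet formatting requirements

✅ Code review → review code against team standards

2. Scope and types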

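2.1 The two types of skills

Type 1: Anthropic's prebuilt skills

Characteristics:

- ✅ Developed and maintained by Anthropic
- ✅ Available to all users out of the box
- ✅ Free to use (you pay only normal usage costs)
- ✅ Continuously updated

Available official skills:

| Skill ID | Name | What it does |
|---|---|---|
| pptx | PowerPoint | Create and edit presentations |
| xlsx | Excel | Create and process spreadsheets, including formulas |
| docx | Word | Create and edit Word documents |

How to use them: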

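```python
# Official skills are referenced directly in the API; no upload is needed
container = {
    "skills": [{
        "type": "anthropic",  # official (prebuilt) skill
        "skill_id": "xlsx",   # skill ID
        "version": "latest",
    }]
}
```

Type 2: Custom skills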

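Characteristics:

- ✅ Created and maintained by you
- ✅ Tailored to specific business needs
- ✅ Contain proprietary knowledge and processes
- ⚠️ Must be uploaded manually

How to add them:

- Upload through the web app (personal use)
- Upload through the API (shared with your team)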

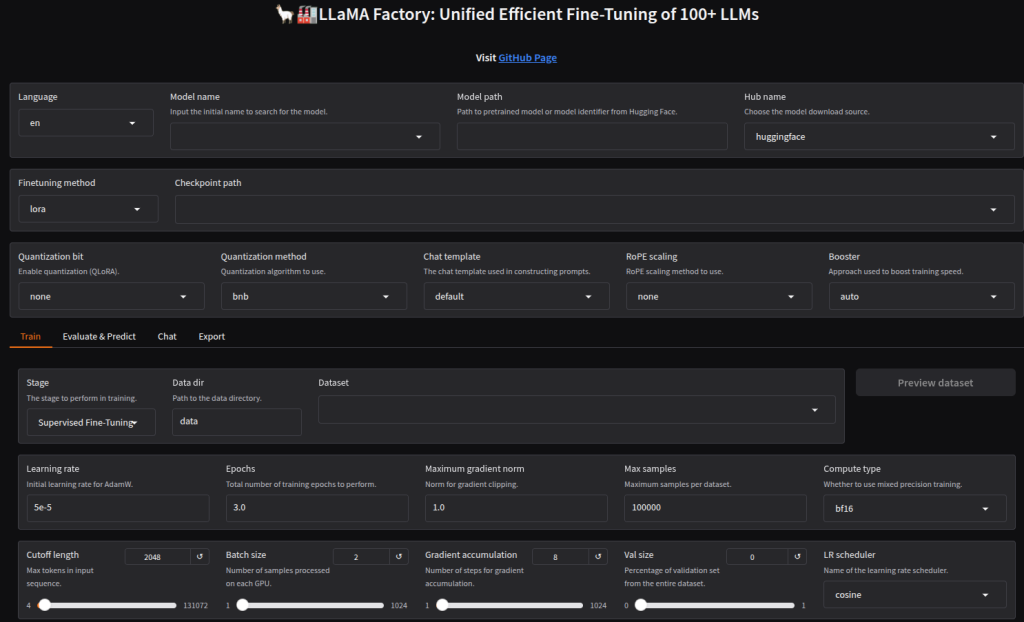

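2.2 Comparing the two ways to use skills

Option 1: The web app

Good for:

- ✅ Personal learning and testing
- ✅ Quick prototyping
- ✅ No programming skills needed
- ✅ One-off needs

Where to upload:

Settings → Capabilities → Skills → Upload skill

Limitations:

- ❌ Uploaded skills are private to you
- ❌ They cannot be shared with your team
- ❌ Everyone has to upload them separately
- ❌ They cannot be managed programmatically

Option 2: The API

Good for:

- ✅ Team collaboration and sharing
- ✅ Production deployments
- ✅ Version control
- ✅ Automated workflows

Key advantages:

- ✅ Shared automatically across the workspace (the biggest advantage)
- ✅ Programmatic management (create, read, update, delete)
- ✅ Versioning support
- ✅ Suitable for continuous integration

Summary:

| Aspect | Web app | API |
|---|---|---|
| Sharing scope | Private to you | Shared across the workspace |
| How you upload | Through the UI | From code |
| Management | Manual | Programmatic CRUD |
| Skills needed | No programming | Basic Python |
| Versioning | Re-upload manually | Automatic version management |
| Best for | Personal testing | Team production use |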

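3. Creating a custom skill

3.1 File structure requirements

Minimal structure (required)

```
your-skill-name/
└── SKILL.md          # the only required file
```

Full structure (recommended)

```
your-skill-name/
├── SKILL.md          # core instructions (required)
├── scripts/          # executable scripts (optional)
│   ├── processor.py
│   └── validator.js
├── resources/        # resource files (optional)
│   ├── template.xlsx
│   ├── logo.png
│   └── style.css
└── REFERENCE.md      # supplementary documentation (optional)
```

3.2 SKILL.md format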

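Required YAML frontmatter

```yaml
---
name: skill-name-here
description: Clear description of when to use this skill
---
```

⚠️ Key points

Allowed fields (only these five):

| Field | Required? | Limit | Notes |
|---|---|---|---|
| name | ✅ Required | Max 64 characters | Unique identifier of the skill |
| description | ✅ Required | Max 1024 characters | The most important field: Claude uses it to decide whether to use the skill |
| license | ❌ Optional | - | License type (e.g., MIT) |
| allowed-tools | ❌ Optional | - | List of tools the skill is allowed to use |
| metadata | ❌ Optional | - | Extra information (version, author, etc.) |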
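You can check the rules in the table above locally before uploading. Below is a minimal sketch of such a pre-upload check, under some assumptions: it is not a YAML parser, and it reads only top-level `key: value` lines between the two `---` markers. The name `check_frontmatter` is hypothetical, and the limits come from the table.

```python
ALLOWED_FIELDS = {"name", "description", "license", "allowed-tools", "metadata"}
MAX_NAME, MAX_DESCRIPTION = 64, 1024


def check_frontmatter(skill_md: str) -> list[str]:
    """Hypothetical pre-upload check; returns a list of problems (empty list = looks OK).

    Deliberately minimal: only top-level `key: value` lines are read, so this is
    not a full YAML parser and multi-line values are not understood.
    """
    lines = skill_md.splitlines()
    if not lines or lines[0].strip() != "---":
        return ["file must start with a '---' frontmatter line"]
    try:
        end = next(i for i in range(1, len(lines)) if lines[i].strip() == "---")
    except StopIteration:
        return ["frontmatter has no closing '---' line"]

    fields = {}
    for line in lines[1:end]:
        # Blank lines, comments and indented lines (e.g. under metadata:) are not top-level fields
        if not line.strip() or line[0] in " \t#":
            continue
        key, sep, value = line.partition(":")
        if sep:
            # Drop a trailing " # comment" and surrounding quotes from the value
            fields[key.strip()] = value.split(" #")[0].strip().strip('"')

    problems = [f"field not allowed at top level: {k}" for k in fields if k not in ALLOWED_FIELDS]
    for key, limit in (("name", MAX_NAME), ("description", MAX_DESCRIPTION)):
        if not fields.get(key):
            problems.append(f"missing required field: {key}")
        elif len(fields[key]) > limit:
            problems.append(f"{key} is longer than {limit} characters")
    return problems
```

To use it, call `check_frontmatter(Path("my-skill/SKILL.md").read_text(encoding="utf-8"))` and fix anything it reports before uploading. The API still does its own validation. This check only catches the common mistakes earlier.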

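❌ Common mistake: a top-level `version` field is not allowed!

```yaml
# ❌ Wrong
---
name: my-skill
description: My skill
version: 1.0.0  # this makes the upload fail!
---

# ✅ Correct
---
name: my-skill
description: My skill
metadata:
  version: "1.0.0"  # put version information under metadata
---
```

3.3 Worked example: a prompt-architecture standard skill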

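This is a real skill that we just created and uploaded successfully.

File structure

```
prompt-architecture-standard/
├── SKILL.md     # core skill file
└── CASES.md     # reference cases
```

SKILL.md contents (abridged)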

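```markdown
---
name: prompt-std
description: Professional prompt-architecture standard. Use when the user needs to write, optimize, or review AI prompts. Suited to creating high-quality prompts that are clearly structured, goal-focused, and free of ambiguity.
---

# Prompt Architecture Standard

## Core principle

Writing a prompt means translating the user's real need into explicit instructions the AI can understand.

A good prompt should:

1. Go straight to the core goal, without redundant description
2. State rules clearly, leaving no room for ambiguity or divergent interpretations
3. Keep a simple structure so the AI's output is stable and reliable

## Standard structure

### 1. Goal statement

State the user's actual purpose and the expected result.

Example:

❌ Wrong: You are an assistant, you can help me write articles, for example tech articles...

✅ Right: Generate a complete, well-structured professional article from the topic and key points the user provides

### 2. Core rules

List the key rules that must be followed; keep each rule short and explicit.

### 3. Constraints

State what is forbidden and where the boundaries are.

### 4. Output format

Define the expected output structure and format precisely.

## Special case: writing agent prompts

### Formats to avoid

❌ No Markdown formatting (#, ##, **, -, etc.)

❌ No XML tags

### Recommended practice

✅ Use plain text, separating paragraphs with line breaks and blank lines

✅ Mark section titles in UPPERCASE

✅ Keep a linear structure that is easy to parse

## Common mistakes and fixes

### Mistake 1: piling up examples

❌ Problem: giving 10+ examples

✅ Fix: replace them with 1-2 rules

### Mistake 2: vague rules

❌ Problem: "be as professional as possible"

✅ Fix: "use industry terminology"

## Review checklist

□ Can the goal be stated in one sentence?

□ Is every rule unambiguous?

□ Is there any redundant description?

□ Does the agent prompt avoid Markdown?

## Quick reference

Key points:

→ Clear goal, stated in one sentence

→ Specific rules, no ambiguity

→ Clear structure, easy to understand

→ No redundancy, no room for divergent readings

Remember: a good prompt should read like precise program instructions, not vague suggestions.
```

Packaging as a ZIP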

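```bash
# Package from the command line, starting in the directory that contains the skill folder
cd /path/to/parent-directory
zip -r prompt-std.zip prompt-architecture-standard/

# On Windows: right-click the folder → Send to → Compressed (zipped) folder
```

Upload limits

- ✅ The ZIP file must be smaller than 8 MB
- ✅ It must contain a SKILL.md file
- ✅ The YAML frontmatter must be valid
- ✅ All files must be UTF-8 encoded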
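Most of these upload limits can be checked while packaging. Below is a standard-library sketch that can stand in for `zip -r`. It zips the folder so that every entry keeps the `skill-name/` prefix, checks that SKILL.md exists, confirms that common text files decode as UTF-8, and compares the finished ZIP against the size limit. It rests on two assumptions. First, whether a file counts as "text" is decided by its extension. Second, 8 MB is read as 8,000,000 bytes, the stricter interpretation. The function name `package_skill` is hypothetical. It does not validate the YAML frontmatter. Write the ZIP outside the skill folder so the archive does not include itself.

```python
import zipfile
from pathlib import Path

MAX_ZIP_BYTES = 8_000_000  # assumption: "< 8 MB" read strictly as decimal megabytes
TEXT_SUFFIXES = {".md", ".py", ".js", ".css", ".txt", ".json", ".yaml", ".yml"}


def package_skill(skill_dir: str, zip_path: str) -> int:
    """Hypothetical helper: zip a skill folder and check the upload limits.

    Returns the ZIP size in bytes; raises ValueError if a limit is violated.
    """
    root = Path(skill_dir)
    if not (root / "SKILL.md").is_file():
        raise ValueError("SKILL.md is missing")

    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if not path.is_file():
                continue
            if path.suffix.lower() in TEXT_SUFFIXES:
                try:
                    path.read_bytes().decode("utf-8")  # text files must be valid UTF-8
                except UnicodeDecodeError:
                    raise ValueError(f"not UTF-8: {path}") from None
            # Keep "skill-name/..." as the entry name, like `zip -r name.zip skill-name/`
            zf.write(path, arcname=path.relative_to(root.parent).as_posix())

    size = Path(zip_path).stat().st_size
    if size >= MAX_ZIP_BYTES:
        raise ValueError(f"ZIP is {size} bytes; it must be under {MAX_ZIP_BYTES}")
    return size
```

Example call: `package_skill("prompt-architecture-standard", "prompt-std.zip")`.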

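4. Uploading and using skills via the API

4.1 Setup

Step 1: Install the SDK

```bash
pip install --upgrade anthropic  # skills support requires a recent SDK version
```

Step 2: Get an API key

Create a key at https://console.anthropic.com/settings/keys

Step 3: Set the environment variable

```bash
export ANTHROPIC_API_KEY="sk-ant-xxxxx"
```

4.2 Uploading a skill to the API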

Option 1: Upload from a folder on disk

```python
import anthropic
from anthropic.lib import files_from_dir

# The client reads ANTHROPIC_API_KEY from the environment by default
client = anthropic.Anthropic()

# Skills endpoints live under client.beta and need the skills beta flag
skill = client.beta.skills.create(
    display_title="Prompt Architecture Standard",
    files=files_from_dir("prompt-architecture-standard"),  # uploads SKILL.md and CASES.md
    betas=["skills-2025-10-02"],
)

print("✅ Skill uploaded!")
print(f"Skill ID: {skill.id}")
print(f"Title: {skill.display_title}")
```

Option 2: Upload file contents from memory

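```python
# Read the file contents yourself
with open("prompt-architecture-standard/SKILL.md", "rb") as f:
    skill_content = f.read()

# Each file is a (path, content, MIME type) tuple; the path keeps the top-level folder
skill = client.beta.skills.create(
    display_title="Prompt Architecture Standard",
    files=[
        ("prompt-architecture-standard/SKILL.md", skill_content, "text/markdown"),
    ],
    betas=["skills-2025-10-02"],
)

print(f"Skill ID: {skill.id}")
# Example output: Skill ID: skill_01AbCdEfGhIjKlMnOpQrStUv
```

After the upload

- ✅ The skill is shared automatically across the workspace
- ✅ Every team member can use it
- ✅ Nobody needs to upload it separately
- ✅ Versioning is supported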
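If you upload file contents yourself and the skill folder holds several files (SKILL.md plus scripts and resources), you first need to turn the whole folder into a list of `(path, content, MIME type)` tuples. Below is a standard-library sketch of a hypothetical `collect_skill_files` helper that builds that list. It assumes the SDK's `files` argument accepts tuples in this form. The MIME mapping for `.md`, `.py` and `.js` is our own choice. The helper does not call the API.

```python
import mimetypes
from pathlib import Path

# Explicit types for common skill files; anything else falls back to mimetypes
KNOWN_TYPES = {".md": "text/markdown", ".py": "text/x-python", ".js": "text/javascript"}


def collect_skill_files(skill_dir: str) -> list[tuple[str, bytes, str]]:
    """Hypothetical helper: build (path, content, mime_type) tuples for a skill folder.

    Paths keep the top-level folder name, e.g. "my-skill/SKILL.md".
    """
    root = Path(skill_dir).resolve()
    if not (root / "SKILL.md").is_file():
        raise FileNotFoundError(f"no SKILL.md in {root}")

    files = []
    for path in sorted(root.rglob("*")):
        if not path.is_file():
            continue
        mime = KNOWN_TYPES.get(path.suffix.lower()) or mimetypes.guess_type(path.name)[0]
        rel = path.relative_to(root.parent).as_posix()
        files.append((rel, path.read_bytes(), mime or "application/octet-stream"))
    return files
```

Pass the returned list as the `files` argument of the upload call, for example `files=collect_skill_files("prompt-architecture-standard")`.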

4.3 Using skills in the API

Full example: using a custom skill

```python
import anthropic

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

# Skills run inside the code execution container, so both beta flags are needed
response = client.beta.messages.create(
    model="claude-sonnet-4-5-20250929",
    max_tokens=4096,
    betas=["code-execution-2025-08-25", "skills-2025-10-02"],
    container={
        "skills": [
            {
                "type": "custom",  # custom skill
                "skill_id": "skill_01AbCdEfGhIjKlMnOpQrStUv",  # replace with your ID
                "version": "latest",
            }
        ]
    },
    messages=[{
        "role": "user",
        "content": "Write me a prompt that generates product descriptions; the output must include a title, a feature list, and usage scenarios",
    }],
    tools=[{
        "type": "code_execution_20250825",
        "name": "code_execution",
    }],
)

# The response can mix tool-use blocks with text blocks; print only the text
for block in response.content:
    if block.type == "text":
        print(block.text)
```

Combining official and custom skills

```python
response = client.beta.messages.create(
    model="claude-sonnet-4-5-20250929",
    max_tokens=4096,
    betas=["code-execution-2025-08-25", "skills-2025-10-02"],
    container={
        "skills": [
            # Official Excel skill
            {"type": "anthropic", "skill_id": "xlsx", "version": "latest"},
            # Official PowerPoint skill
            {"type": "anthropic", "skill_id": "pptx", "version": "latest"},
            # Your custom skill
            {"type": "custom", "skill_id": "skill_01AbCdEfGhIjKlMnOpQrStUv", "version": "latest"},
        ]
    },
    messages=[{
        "role": "user",
        "content": "Analyze this sales data and build a presentation that follows our brand standards",
    }],
    tools=[{"type": "code_execution_20250825", "name": "code_execution"}],
)
```

Characteristics:

- ✅ Up to 8 skills can be used in a single request
- ✅ Claude works out when to use which skill
- ✅ Skills can work together
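You can check the 8-skill limit and the two skill types on the client side before sending a request. Below is a small sketch with the hypothetical helpers `skill` and `build_container`. The API still does its own validation. Rejecting a duplicate skill is our own choice here, not a documented API rule.

```python
from typing import Literal, TypedDict

MAX_SKILLS_PER_REQUEST = 8  # limit stated in this guide


class SkillRef(TypedDict):
    type: Literal["anthropic", "custom"]
    skill_id: str
    version: str


def skill(skill_type: str, skill_id: str, version: str = "latest") -> SkillRef:
    """Shorthand for one entry of container["skills"]."""
    return {"type": skill_type, "skill_id": skill_id, "version": version}


def build_container(*skills: SkillRef) -> dict:
    """Assemble the `container` argument and fail early on obvious mistakes."""
    if not skills:
        raise ValueError("at least one skill is required")
    if len(skills) > MAX_SKILLS_PER_REQUEST:
        raise ValueError(f"{len(skills)} skills given; at most {MAX_SKILLS_PER_REQUEST} per request")
    seen = set()
    for s in skills:
        if s["type"] not in ("anthropic", "custom"):
            raise ValueError(f"unknown skill type: {s['type']!r}")
        key = (s["type"], s["skill_id"])
        if key in seen:
            raise ValueError(f"duplicate skill: {s['skill_id']}")
        seen.add(key)
    return {"skills": [dict(s) for s in skills]}
```

Usage: `container=build_container(skill("anthropic", "xlsx"), skill("anthropic", "pptx"), skill("custom", "skill_01AbCdEfGhIjKlMnOpQrStUv"))`.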

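4.4 Managing skills (CRUD operations)

Listing all skills

```python
# List all custom skills
skills = client.beta.skills.list(source="custom", betas=["skills-2025-10-02"])

for skill in skills.data:
    print(f"Title: {skill.display_title}")
    print(f"ID: {skill.id}")
    print(f"Source: {skill.source}")
    print("-" * 60)
```

Retrieving a specific skill

```python
skill = client.beta.skills.retrieve(
    skill_id="skill_01AbCdEfGhIjKlMnOpQrStUv",
    betas=["skills-2025-10-02"],
)
print(f"Skill title: {skill.display_title}")
print(f"Latest version: {skill.latest_version}")
```

Creating a new version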

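```python
from anthropic.lib import files_from_dir

# Upload the updated skill folder as a new version
new_version = client.beta.skills.versions.create(
    skill_id="skill_01AbCdEfGhIjKlMnOpQrStUv",
    files=files_from_dir("prompt-architecture-standard"),  # folder with the updated files
    betas=["skills-2025-10-02"],
)

print(f"New version: {new_version.version}")
```

Deleting a skill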

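```python
SKILL_ID = "skill_01AbCdEfGhIjKlMnOpQrStUv"
BETAS = ["skills-2025-10-02"]

# 1. Delete every version first
versions = client.beta.skills.versions.list(skill_id=SKILL_ID, betas=BETAS)
for version in versions.data:
    client.beta.skills.versions.delete(skill_id=SKILL_ID, version=version.version, betas=BETAS)

# 2. Then delete the skill itself
client.beta.skills.delete(skill_id=SKILL_ID, betas=BETAS)

print("✅ Skill deleted")
```

5. Technical architecture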

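5.1 Does it use a virtual machine?

Answer: yes. Skills run inside an isolated virtual-machine container.

Container environment

```
┌──────────────────────────────────────┐
│ Claude Skills container environment  │
├──────────────────────────────────────┤
│ OS: Ubuntu 24                        │
│ Python: common packages preinstalled │
│ Node.js: npm packages preinstalled   │
│ File system: /skills/{skill-name}/   │
│ Working dir: /home/claude            │
├──────────────────────────────────────┤
│ Limits:                              │
│ ❌ No network access                  │
│ ❌ Cannot install packages at runtime │
│ ✅ Can execute code                   │
│ ✅ Can read and write files           │
└──────────────────────────────────────┘
```

Environment isolation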

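| Property | Description |
|---|---|
| Process isolation | By default, each request runs in its own container |
| File system | Temporary; reset when the request ends |
| Network isolation | No access to external APIs |
| Preinstalled packages | Common Python and Node.js packages are preinstalled |
| No persistence | No data is kept between sessions |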
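The "temporary file system, reset at the end of the request" behavior in the table can be mimicked locally when testing a skill's scripts. This is purely a local analogy, not Anthropic's implementation. The hypothetical `run_in_fresh_sandbox` copies the skill into a throwaway directory laid out as `skills/{skill-name}/`, runs a task against the copy, and deletes everything afterwards. It provides no real network or process isolation.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Callable, TypeVar

T = TypeVar("T")


def run_in_fresh_sandbox(skill_dir: str, task: Callable[[Path], T]) -> T:
    """Local analogy only: copy a skill into a temp dir, run `task` on the copy, then delete it."""
    src = Path(skill_dir)
    with tempfile.TemporaryDirectory() as root:
        # Mirror the skills/{skill-name}/ layout under a fresh temporary root
        skill_copy = Path(root) / "skills" / src.name
        shutil.copytree(src, skill_copy)
        # Leaving the with-block deletes the directory, so nothing persists between calls
        return task(skill_copy)
```

Anything the task writes lives only inside the temporary copy. A test run therefore cannot leave files behind in the real skill folder, which matches the "no persistence" row above.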

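Skill loading flow

1. The user sends a request
2. Claude analyzes the request and identifies the skills it needs
3. A new isolated container is created
4. The skill files are copied into the container, at /skills/{skill-name}/
5. Claude reads SKILL.md to get its instructions
6. If needed, it runs the skill's scripts (inside the container, with no internet access)
7. The response is generated and returned
8. The container is destroyed and its file system is reset

5.2 Progressive disclosure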

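Core design principle: load content only when it is needed, so context is not wasted.

Three-level loading

```
At startup (small fixed cost: name and description only)
├─ Level 1: metadata
│  └─ The name and description of every skill are written
│     into the system prompt, so Claude knows which skills exist
│
After a request (tokens spent on demand)
├─ Level 2: SKILL.md
│  └─ Once Claude decides a skill is needed, it reads that
│     skill's full SKILL.md into the context
│
During execution (tokens spent on demand)
└─ Level 3: additional resources
   └─ Reference docs such as REFERENCE.md are read if needed,
      template files such as template.xlsx are loaded if needed,
      and scripts such as processor.py are run if needed
```

Advantages:

- ✅ Many skills (100+) can be installed with little overhead, because each costs only its name and description until it is used
- ✅ Only the skills actually used spend tokens on their full content
- ✅ Running a script does not put its code into the context; only its output comes back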
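The three levels above can be illustrated with a toy model. This is not how Claude is implemented. It counts characters as a stand-in for tokens, and the `Skill` and `Context` classes are hypothetical. The point it shows: installing many skills adds only their one-line metadata, and a skill's full body (level 2) or an extra file (level 3) is added only when it is loaded.

```python
from dataclasses import dataclass, field


@dataclass
class Skill:
    name: str
    description: str
    body: str                                                 # full SKILL.md text (level 2)
    resources: dict[str, str] = field(default_factory=dict)  # extra files (level 3)


class Context:
    """Toy model of progressive disclosure; sizes are character counts, not real tokens."""

    def __init__(self, skills: list[Skill]):
        self.skills = {s.name: s for s in skills}
        # Level 1: only name + description go into the system prompt up front
        self.parts = [f"- {s.name}: {s.description}" for s in skills]

    def load_skill(self, name: str) -> None:
        # Level 2: the full body is added only once the skill is judged relevant
        self.parts.append(self.skills[name].body)

    def load_resource(self, name: str, filename: str) -> None:
        # Level 3: supplementary files are added only if the task needs them
        self.parts.append(self.skills[name].resources[filename])

    def size(self) -> int:
        return sum(len(p) for p in self.parts)
```

With 100 skills whose bodies are 10,000 characters each, the starting context holds only 100 short metadata lines. Loading one skill adds exactly one body.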

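5.3 Code execution security

Sandbox restrictions

```python
import pandas as pd  # ✅ preinstalled packages can be imported

# ✅ Allowed operations
df = pd.read_csv("data.csv")  # read a file inside the container
result = df.sum()             # compute
print(result)                 # print the result

# ❌ Not allowed (commented out)
# pip install some-package        -> new packages cannot be installed at runtime
# requests.get("https://...")     -> no network access, so the request fails
# os.system("rm -rf /")           -> cannot affect the host system
```

Security measures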

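| Security layer | Measure |
|---|---|
| Network isolation | No network access at all |
| File isolation | Only files inside the container are accessible |
| Process isolation | Each request runs in its own container |
| Time limit | Execution is terminated automatically on timeout |
| Resource limits | CPU and memory are capped |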
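A script that runs too long is terminated, so skill scripts should finish quickly. You can reproduce that behavior locally with `subprocess.run(timeout=...)` while developing a script. This sketch is only an analogy. `run_script` is a hypothetical name, and this guide does not specify the container's actual time limit.

```python
import subprocess
import sys


def run_script(code: str, timeout_s: float) -> tuple[str, str]:
    """Run Python code in a child process; return ("ok", stdout) or ("timeout", "")."""
    try:
        proc = subprocess.run(
            [sys.executable, "-c", code],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # the child is killed if it runs longer than this
        )
    except subprocess.TimeoutExpired:
        return ("timeout", "")
    return ("ok", proc.stdout)
```

For example, `run_script("print(6 * 7)", 10)` finishes normally, while a script that sleeps past the limit comes back as `("timeout", "")` instead of hanging.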

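5.4 Preinstalled packages

Python packages

```
# Data processing
pandas, numpy, scipy

# Excel / documents
openpyxl, python-docx, python-pptx, PyPDF2

# Visualization
matplotlib, seaborn, plotly

# Other
requests (importable, but with no network access it cannot reach outside hosts), beautifulsoup4, pillow
```

Node.js packages

```
// File handling
fs, path

// Common utilities
lodash, axios (same caveat: no network access)
```

⚠️ Important: packages cannot be installed at runtime, so every dependency must come from the preinstalled set.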
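Because nothing can be installed at runtime, a skill script can check its dependencies up front. That way it fails with a clear message instead of an `ImportError` halfway through a task. Below is a sketch using the standard `importlib.util.find_spec`. Note that it checks import names, which can differ from package names: python-docx is imported as `docx`, python-pptx as `pptx`, pillow as `PIL`, and beautifulsoup4 as `bs4`. The helper names are hypothetical.

```python
import importlib.util


def check_packages(names: list[str]) -> dict[str, bool]:
    """Report which top-level module names are importable in the current environment."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


def require(names: list[str]) -> None:
    """Fail fast with one clear message listing every missing module."""
    missing = [name for name, ok in check_packages(names).items() if not ok]
    if missing:
        raise RuntimeError(f"missing preinstalled packages: {', '.join(missing)}")
```

A skill script might start with `require(["pandas", "openpyxl", "docx", "pptx"])`.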

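6. Best practices

6.1 Skill design principles

✅ DO

- Stay focused
  - One skill does one thing
  - Avoid "do-everything" skills
- Write a clear description

```yaml
# ✅ Good description
description: Use when the user needs a PowerPoint deck that follows the company brand standards

# ❌ Vague description
description: Helps users create documents
```

- Keep examples lean
  - At most 2 examples
  - Prefer rules over examples
- Keep the structure modular
  - Split large content across multiple files
  - Put supplementary information in REFERENCE.md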

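❌ DON'T

- Don't hard-code sensitive information

```yaml
# ❌ Dangerous
metadata:
  api_key: "sk-xxx"

# ✅ Safe
# Pass secrets through environment variables or an MCP connection instead
```

- Don't use YAML fields that are not allowed

```yaml
# ❌ Wrong
version: 1.0.0
author: John

# ✅ Right
metadata:
  version: "1.0.0"
  author: "John"
```

- Don't depend on the external network

```python
# ❌ Will not work: there is no network access
import requests
requests.get("https://api.example.com")

# ✅ Use preinstalled packages and local data
import pandas as pd
df = pd.read_csv("local_data.csv")
```

6.2 Web app or API?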

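Decision flow

```
Need to share with your team?
├─ Yes → upload through the API
│   └─ Advantage: automatic workspace sharing
│
└─ No → Need version control?
    ├─ Yes → upload through the API
    │   └─ Advantage: programmatic management
    │
    └─ No → Need programmatic integration?
        ├─ Yes → upload through the API
        │   └─ Advantage: automation in code
        │
        └─ No → upload through the web app
            └─ Advantage: quick and simple
```

Specific recommendations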

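Use the web app if:

- ✅ You are learning or testing on your own
- ✅ You are prototyping quickly
- ✅ You do not want to write code
- ✅ It is a one-off need

Use the API if:

- ✅ It is a team project (the most important reason)
- ✅ You are deploying to production
- ✅ You need CI/CD integration
- ✅ You need version management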
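The decision flow above collapses to a single rule: if any one of the three needs applies, use the API, and otherwise use the web app. Below is a small sketch of that rule. `choose_upload_method` is a hypothetical name. It checks the needs in the flow chart's order, so the first matching need supplies the reason.

```python
def choose_upload_method(team_sharing: bool, version_control: bool, programmatic: bool) -> tuple[str, str]:
    """Return (method, reason), checking needs in the same order as the flow chart."""
    if team_sharing:
        return "api", "automatic workspace sharing"
    if version_control:
        return "api", "programmatic management"
    if programmatic:
        return "api", "automation in code"
    return "web", "quick and simple"
```

Across all eight combinations of inputs, the method is "api" exactly when at least one need is true.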

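6.3 Troubleshooting

Q1: The skill isn't being triggered

Possible causes:

- The description is not specific enough
- The skill is not enabled
- The request is not explicit enough

Solution: make the request more explicit, for example:

```
Use the prompt architecture standard to help me optimize this prompt...
```

Q2: The upload fails

Checklist:

- The ZIP file is smaller than 8 MB
- It contains SKILL.md
- The YAML is valid (no top-level version field)
- Both name and description are present
- Files are UTF-8 encoded

Q3: How do I debug a skill?

Approach:

- Look at Claude's thinking process
- Claude shows a message such as "Reading the XX skill"
- Adjust the description based on what you see

Q4: Can skills conflict with each other?

Answer: no.

- Claude determines which skills are needed
- Multiple skills can work together
- Each skill runs independently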

7. Quick reference

7.1 SKILL.md template

```markdown
---
name: your-skill-name
description: Clear and specific description of when to use this skill
---

# Your Skill Name

## Purpose
[What this skill does]

## Usage
[When to use it]

## Instructions
[Step-by-step guide]

## Examples
[1-2 minimal examples if needed]
```

7.2 Python upload template

```python
import anthropic
from anthropic.lib import files_from_dir

client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment

skill = client.beta.skills.create(
    display_title="Your Skill",
    files=files_from_dir("your-skill"),
    betas=["skills-2025-10-02"],
)

print(f"Skill ID: {skill.id}")
```

7.3 API usage template

```python
response = client.beta.messages.create(
    model="claude-sonnet-4-5-20250929",
    max_tokens=4096,
    betas=["code-execution-2025-08-25", "skills-2025-10-02"],
    container={
        "skills": [{
            "type": "custom",
            "skill_id": "your-skill-id",
            "version": "latest",
        }]
    },
    messages=[{"role": "user", "content": "your request"}],
    tools=[{"type": "code_execution_20250825", "name": "code_execution"}],
)
```

8. Summary

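Key points

- What skills are: reusable packages of expertise that Claude loads on demand
- Two types: official prebuilt skills (xlsx, pptx, etc.) and custom skills
- Two ways to use them: the web app (personal) and the API (shared with the team)
- Key file: SKILL.md (required), with valid YAML frontmatter
- Runtime environment: an isolated virtual-machine container with no network access
- Biggest advantage: skills uploaded through the API are shared across the workspace automatically

Technical characteristics

- ✅ Progressive disclosure (no wasted context)
- ✅ Container isolation (secure and reliable)
- ✅ Composable (multiple skills work together)
- ✅ Portable (usable across platforms)

Recommendations

- 🎯 Personal testing: get started quickly with the web app
- 🚀 Team production: integrate and deploy through the API
- 📝 Clear descriptions: they determine when a skill is triggered
- 🔧 Stay focused: one skill, one task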